Hi everyone,

When I send multiple POST requests simultaneously, I get this response on all requests except the last one:

error: MutationFailure::MutationError::InvalidSuccessor.

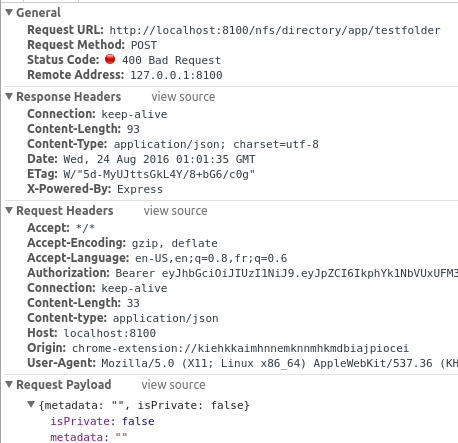

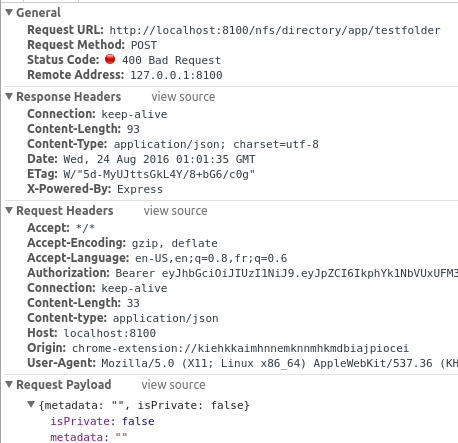

This is what the request looks like:

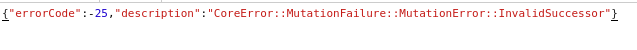

And this is the response I get:

Doing one request at a time and waiting for the answer before sending another one works well.

Any idea?

This is similar to double-spend protection. If you try to mutate quickly, the group has not yet agreed on the new packet (or owner) and will not reach consensus. When it does, the mutation is “atomic” and set in stone. So to be sure an element is updated, you should wait for a response or do a read after write on the data; otherwise the network can see the next mutation as invalid. Only a valid successor will work (so version +1 and signatures match for ownership or transfer).
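To make the "valid successor" rule concrete, here is a minimal sketch in Python. It is not the routing library's code: the `Record` type, the `is_valid_successor` function, and the use of a plain owner name in place of real signatures are all assumptions made for illustration, and ownership transfer is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical stand-in for a versioned, owned piece of structured data."""
    version: int
    owner: str
    payload: bytes


def is_valid_successor(current: Record, proposed: Record, signer: str) -> bool:
    """Simplified check: version must be current + 1 and the signer must own the data."""
    if proposed.version != current.version + 1:
        return False
    # Assumption: a real network verifies cryptographic signatures here.
    return signer == current.owner


current = Record(version=3, owner="alice", payload=b"a")
# Two updates prepared at the same time, both built on version 3.
first = Record(version=4, owner="alice", payload=b"b")
second = Record(version=4, owner="alice", payload=b"c")

# The first update is accepted against version 3.
assert is_valid_successor(current, first, signer="alice")
# Once `first` is the agreed state, `second` is stale (4 is not 4 + 1).
assert not is_valid_successor(first, second, signer="alice")
```

Under these assumptions, two mutations prepared from the same version cannot both succeed, which matches the behaviour reported above.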

[Edit: you can see the main call here: https://github.com/maidsafe/routing/blob/master/src/structured_data.rs#L108]

Thanks for the reply, I understand better what the error code means now. That said, I also get the same error when I try to POST two different files simultaneously. For example, these two requests produce the same error even though they target different files.

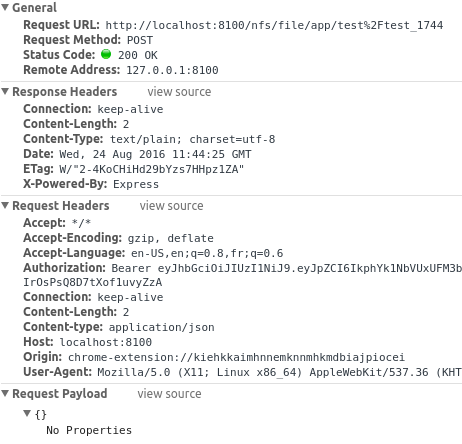

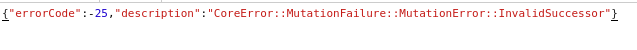

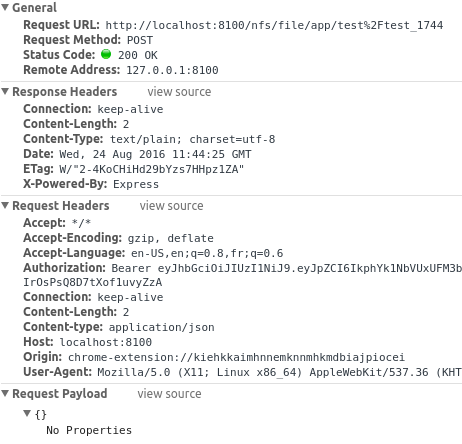

The first file goes through without problem:

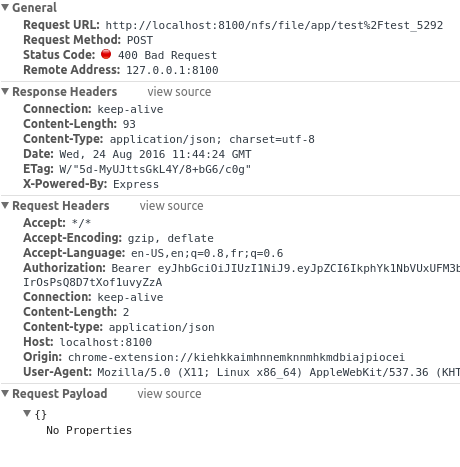

The second file gives the error `{"errorCode":-25,"description":"CoreError::MutationFailure::MutationError::InvalidSuccessor"}`

A simple use case of what I’m trying to do is uploading files in bulk. At the moment I cannot do it; I need to wait for the response to each request before sending a new one.

Thanks for the help!

@Krishna may be the best bet here. I am not as familiar with the client API yet, though I will be soon.

The NFS directory holds the files. An NFS directory is a Structured Data and cannot be mutated simultaneously. When a file is saved to the network, the data of the file is Self Encrypted and stored as Immutable Data. The resulting data map is saved in the Directory Listing (Structured Data), and this Directory Listing is finally saved to the network.

When two files belonging to the same directory are updated simultaneously, the same Structured Data is mutated in parallel, which results in this error.

At present it would be better to update the files one after the other. Even in the demo app, the files are created in the network one after the other and not simultaneously.
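A minimal sketch of "one after the other" using Python's `asyncio`. The `upload_file` coroutine is a hypothetical placeholder for the real POST to the launcher; it only records when each upload starts and finishes.

```python
import asyncio


async def upload_file(path, log):
    """Hypothetical placeholder for a POST request that uploads one file."""
    log.append(f"start {path}")
    await asyncio.sleep(0.01)  # stands in for network latency
    log.append(f"done {path}")


async def upload_all(paths, log):
    # Await each upload before starting the next one, so the shared
    # directory listing is never mutated by two requests at once.
    for path in paths:
        await upload_file(path, log)


log = []
asyncio.run(upload_all(["a.txt", "b.txt"], log))
```

The important part is the `await` inside the loop: starting all uploads with `asyncio.gather` instead would reproduce the concurrent mutation described above.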

I notice that you can read in parallel though, so you can get file details simultaneously, which is faster in many cases.

Yes, reading files simultaneously should work fine. Only concurrent mutations are not possible at present.

Presumably, it would be possible to bundle puts together as one operation to avoid this limitation in the future?

I see. Would it work if I create a sub-directory for each file? Not optimal, but for my use case it shouldn’t matter much.

Also, are there plans to improve this? For example, by adding a queue in the launcher to stack all similar requests and execute them one by one, so the app doesn’t need to handle it?
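The queue idea can be sketched on the application side as follows. This is not launcher code: `WriteQueue`, `submit`, and `fake_write` are hypothetical names, and the write itself is simulated with a short sleep.

```python
import asyncio


class WriteQueue:
    """Runs submitted write operations one at a time, in submission order."""

    def __init__(self):
        self._queue = asyncio.Queue()
        self._worker = None

    async def submit(self, operation):
        # Start the single worker lazily, inside the running event loop.
        if self._worker is None:
            self._worker = asyncio.create_task(self._run())
        done = asyncio.get_running_loop().create_future()
        await self._queue.put((operation, done))
        return await done

    async def _run(self):
        while True:
            operation, done = await self._queue.get()
            try:
                done.set_result(await operation())
            except Exception as exc:  # hand the failure back to the caller
                done.set_exception(exc)


async def demo():
    queue = WriteQueue()
    active = 0
    max_active = 0

    async def fake_write(name):
        # Hypothetical write; tracks how many writes overlap.
        nonlocal active, max_active
        active += 1
        max_active = max(max_active, active)
        await asyncio.sleep(0.01)
        active -= 1
        return name

    names = ["a", "b", "c"]
    results = await asyncio.gather(
        *(queue.submit(lambda n=n: fake_write(n)) for n in names)
    )
    return results, max_active


results, max_active = asyncio.run(demo())
```

Callers can fire requests concurrently while the single worker applies them one by one. A queue like this only orders writes issued from one process, so it would not help when several machines modify the same directory.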

Either way it’s good to know and probably worth documenting somewhere.

I just ran into this same problem when creating both files and subdirectories.

Given the explanation above, I guess that modifications to each directory level need to be serialised due to how it’s all stored internally.

To me, the complete thread reads like a bug report for the launcher API implementation. It’s leaking implementation details to clients, and the launcher should quite obviously be responsible for handling the necessary serialisation.

I am not in favour of this approach, because it only resolves concurrent handling while running on a single machine.

That said, I am not ruling it out completely either. Let me check with @ustulation whether something can be done better.

I’ve been struggling to implement some sensible locking scheme here that’s more flexible than serialising all NFS modifications.

It’s not going very well. Based upon your reply above, I assumed that “per directory” locks would be sufficient, but that doesn’t really seem to be the case.

My most recent failure looks like this:

Rows are: :sequence-number, :locked-path :modified-path

14]: app/www/dir-1/dir-2/dir-3 for app/www/dir-1/dir-2/dir-3/index.html started.

11]: app/www/dir-1/dir-2/dir-3/dir-4 for app/www/dir-1/dir-2/dir-3/dir-4/empty.txt started.

18]: app/www/dir-1 for app/www/dir-1/index.html started.

boom -> 21]: app/www/dir-2 for app/www/dir-2/dir-3 started.

That is, while uploading files to www/dir-1 and a few subdirectories, I get an InvalidSuccessor error when creating a subdirectory in www/dir-2.

This is just one permutation of the problem, but they all share the same essential property: modifications to a different directory (although one with a shared parent somewhere along the line) cause it to fail.

Is this to be expected? And if so, how far “up” does this go? Should I expect different applications to clash in the same way?

That seems like it would essentially lock us down to a single active write per account at a global level?

When a directory/file is updated, the metadata of the modified directory also gets updated in its parent directory. So both the current directory and its parent are updated.

This should be just one level up.

If the directory is shared in safe drive and different applications access it for modification, then this is certainly possible.

@Krishna thanks!

Knowing that it bubbles up to the parent level, I finally have a stable solution in place.

For anyone else attempting this, the key thing is that if you imagine “per directory” locks, you almost always want to grab two locks: one on the parent and one on the target directory.
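A rough sketch of the two-lock idea in Python, not the poster's actual solution. `DirectoryLocks` and `writing` are hypothetical names, the write is simulated, and acquiring locks in sorted path order is an added assumption to keep two writers from deadlocking on each other.

```python
import asyncio
import posixpath
from collections import defaultdict
from contextlib import asynccontextmanager


class DirectoryLocks:
    """One asyncio.Lock per directory path, created on first use."""

    def __init__(self):
        self._locks = defaultdict(asyncio.Lock)

    @asynccontextmanager
    async def writing(self, directory):
        directory = directory.rstrip("/")
        parent = posixpath.dirname(directory)
        # Lock the target and its parent (if any), always in sorted order.
        paths = sorted({directory, parent} - {""})
        held = []
        try:
            for path in paths:
                lock = self._locks[path]
                await lock.acquire()
                held.append(lock)
            yield paths
        finally:
            for lock in reversed(held):
                lock.release()


async def demo():
    locks = DirectoryLocks()
    events = []

    async def write(directory):
        # Hypothetical write into `directory`, e.g. creating a file there.
        async with locks.writing(directory):
            events.append(("start", directory))
            await asyncio.sleep(0.01)
            events.append(("end", directory))

    # Sibling directories share the parent app/www, so these must not overlap.
    await asyncio.gather(write("app/www/dir-1"), write("app/www/dir-2"))
    return events


events = asyncio.run(demo())
```

With only per-directory locks, the two writes above would run in parallel and both would touch `app/www`. Taking the parent lock as well serialises them, while writes whose target and parent are both different can still proceed concurrently.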